Adjacent Beam Classifier (ABC) pipeline

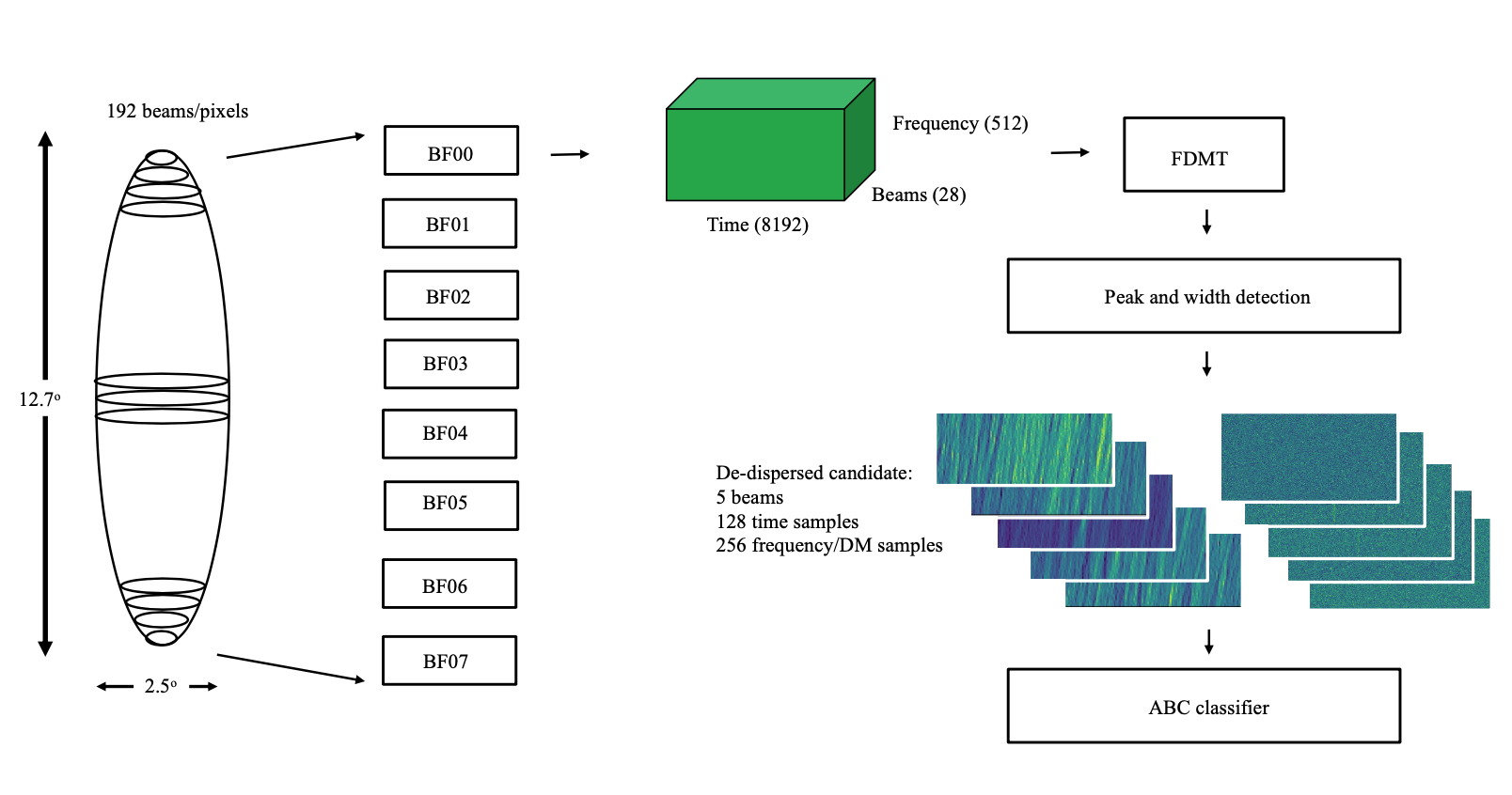

We used the UTMOST-NS interferometric system to search for fast radio bursts (FRBs). Its field of view of 2.5 × 12.7 deg was divided into 192 beams (or pixels).

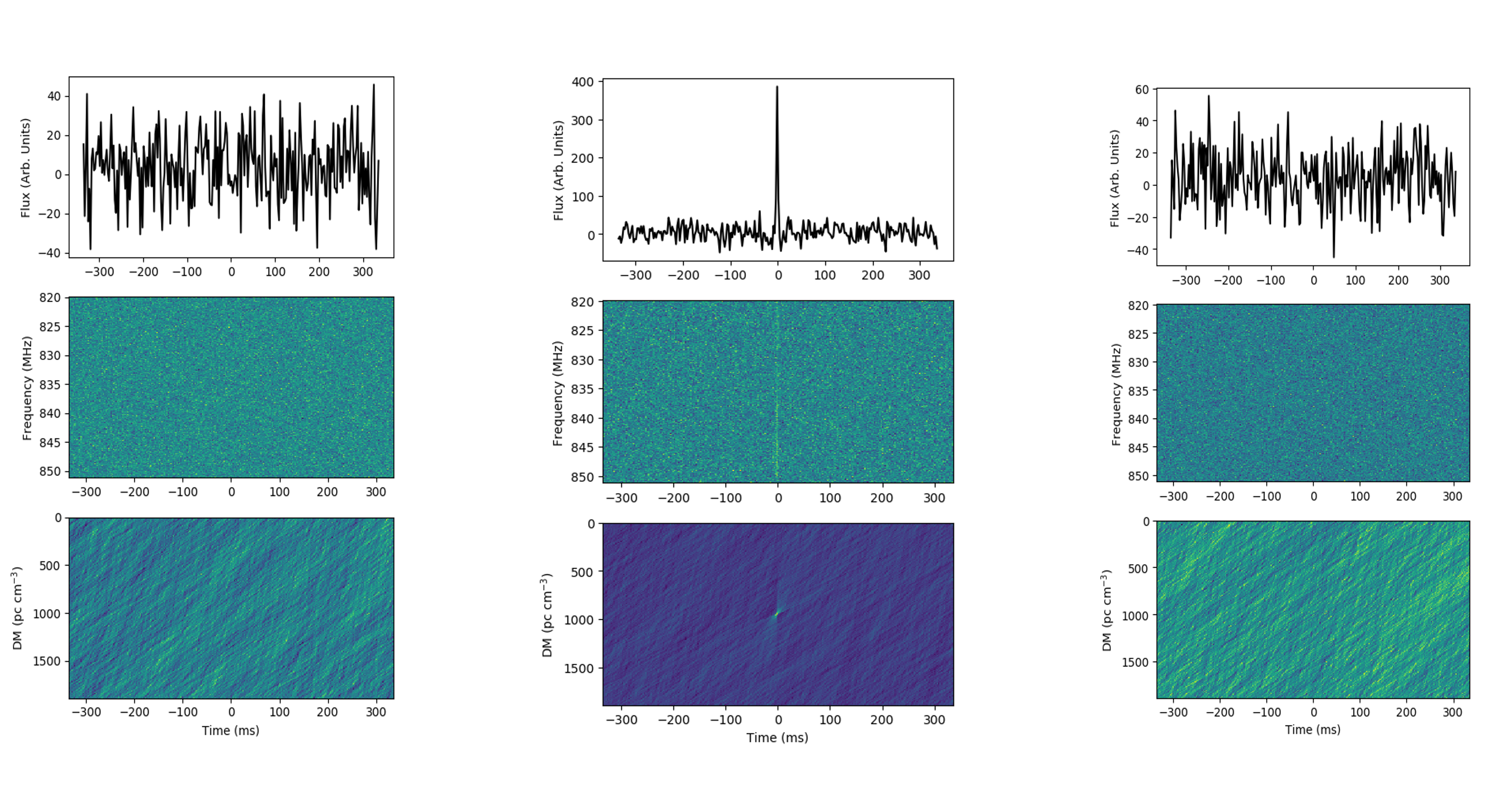

In our search for FRBs, spatial information (the dynamic spectra from adjacent beams) was used in candidate classification for the first time. A celestial signal comes from a very distant source, so it arrives with a plane wavefront and hence is present in just one of the beams. Radio Frequency Interference (RFI), which produces false-positive candidates and results from nearby terrestrial sources such as phone calls, instead has a curved wavefront and hence is present in multiple beams (see below).
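The spatial argument above can be illustrated with a toy rule that simply counts the beams in which a candidate is detected. This is only a sketch of the idea, not the CNN classifier used in the pipeline: the function names, the S/N threshold of 8 and the per-beam values are all assumptions made for illustration.

```python
def beams_with_detection(snr_by_beam, threshold=8.0):
    """Return the indices of beams whose S/N meets the (assumed) threshold."""
    return [i for i, snr in enumerate(snr_by_beam) if snr >= threshold]


def spatial_footprint_label(snr_by_beam, threshold=8.0):
    """Toy rule: detection in one beam -> 'FRB-like', in several -> 'RFI-like'."""
    hits = beams_with_detection(snr_by_beam, threshold)
    if not hits:
        return "no detection"
    return "FRB-like" if len(hits) == 1 else "RFI-like"


# Hypothetical per-beam S/N values for a window of 7 neighbouring beams.
celestial = [1.2, 0.8, 1.5, 14.0, 1.1, 0.9, 1.3]
interference = [9.5, 11.0, 12.4, 14.0, 12.1, 10.3, 8.8]

print(spatial_footprint_label(celestial))     # FRB-like
print(spatial_footprint_label(interference))  # RFI-like
```

A hard threshold like this is brittle for faint candidates, which is one reason a learned classifier operating on the dynamic spectra themselves is a more natural fit.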

Using this information, a classification model was implemented in a stream-processing framework that operates on GPUs, as a block that runs after the candidate-production stage. It took ∼1.1 s to pre-process the data and classify the candidates from each 2.7 s data block (for 24 beams) on a GTX TITAN-12 GB GPU. A single evaluation of the convolutional neural network (CNN) classifier took only ∼1.9 ms per candidate, plus 45 ms of pre-processing time. Because each block was processed in less time than it took to record, the pipeline could perform classifications in near real time.
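The timing figures can be turned into a rough real-time budget. The sketch below uses only the numbers quoted above; the assumption that candidates are handled strictly one after another, and the helper names, are mine and may not reflect how the pipeline actually schedules work.

```python
BLOCK_DURATION_S = 2.7    # length of one data block (from the text)
PROCESSING_TIME_S = 1.1   # pre-processing + classification per block (from the text)
PREPROCESS_MS = 45.0      # per-candidate pre-processing time (from the text)
CNN_EVAL_MS = 1.9         # per-candidate CNN evaluation time (from the text)


def real_time_fraction(processing_s, block_s):
    """Fraction of the block duration spent processing; below 1 keeps up with the data."""
    return processing_s / block_s


def sequential_candidate_budget(block_s, per_candidate_ms):
    """Upper bound on candidates per block, ASSUMING strictly sequential handling."""
    return int(block_s * 1000 // per_candidate_ms)


fraction = real_time_fraction(PROCESSING_TIME_S, BLOCK_DURATION_S)
per_candidate_ms = PREPROCESS_MS + CNN_EVAL_MS
budget = sequential_candidate_budget(BLOCK_DURATION_S, per_candidate_ms)

print(round(fraction, 2))          # 0.41
print(round(per_candidate_ms, 1))  # 46.9
print(budget)                      # 57
```

Under these assumptions the measured block time uses about 41% of the available 2.7 s, leaving headroom for bursts of candidates.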

The radio frequency data were split across 8 GPU-enabled nodes for pre-processing, so each node processed 24 beams. Data blocks of dimension 512 × 8192 × 24 (frequency samples × time samples × beams) were fed to a GPU on each node, and candidates for classification were passed through a pre-processing pipeline. For more information about the pre-processing steps, please refer to my thesis!
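As a quick check of the numbers above, the following sketch splits the 192 beams over the 8 nodes and computes the size of one data block. The contiguous beam-to-node assignment and the bytes-per-sample values are assumptions for illustration; the text does not state either.

```python
N_BEAMS = 192   # total beams (from the text)
N_NODES = 8     # GPU-enabled nodes (from the text)
N_FREQ = 512    # frequency samples per block (from the text)
N_TIME = 8192   # time samples per block (from the text)


def beams_for_node(node, n_beams=N_BEAMS, n_nodes=N_NODES):
    """Beam indices handled by one node, ASSUMING contiguous, equal-sized groups."""
    if not 0 <= node < n_nodes:
        raise ValueError("node index out of range")
    per_node = n_beams // n_nodes
    start = node * per_node
    return list(range(start, start + per_node))


def block_size_mib(n_freq, n_time, n_beams, bytes_per_sample):
    """Size of one data block in MiB for a given (assumed) sample width."""
    return n_freq * n_time * n_beams * bytes_per_sample / 2**20


beams_per_node = len(beams_for_node(0))
print(beams_per_node)                                     # 24
print(beams_for_node(7)[-1])                              # 191
print(N_FREQ * N_TIME * beams_per_node)                   # 100663296
print(block_size_mib(N_FREQ, N_TIME, beams_per_node, 1))  # 96.0
print(block_size_mib(N_FREQ, N_TIME, beams_per_node, 4))  # 384.0
```

Even at 4 bytes per sample a single block would fit comfortably in 12 GB of GPU memory.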

The pre-processed data were then sent, together with the information from the adjacent beams, to a CNN-based classification system, which classified each candidate as an FRB or RFI.
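One way to hand adjacent-beam information to a CNN is to stack the candidate's dynamic spectrum with those of its neighbours as separate input channels. The sketch below shows that idea in plain Python. The number of neighbours (`half_width`), the zero-filling of missing neighbours at the edge of the beam range, and the function names are all assumptions; the actual input layout is described in the thesis, not here.

```python
def adjacent_beam_indices(beam, n_beams, half_width=1):
    """Candidate beam and its neighbours; None marks a neighbour outside the range."""
    if not 0 <= beam < n_beams:
        raise ValueError("beam index out of range")
    return [b if 0 <= b < n_beams else None
            for b in range(beam - half_width, beam + half_width + 1)]


def stack_adjacent(spectra_by_beam, beam, half_width=1):
    """Stack dynamic spectra (freq x time lists) of a beam and its neighbours.

    Missing neighbours are filled with zeros (an assumed padding choice).
    """
    n_freq = len(spectra_by_beam[beam])
    n_time = len(spectra_by_beam[beam][0])
    blank = [[0.0] * n_time for _ in range(n_freq)]
    indices = adjacent_beam_indices(beam, len(spectra_by_beam), half_width)
    return [blank if i is None else spectra_by_beam[i] for i in indices]


# Tiny hypothetical example: 4 beams, each a 2 x 3 dynamic spectrum filled with its index.
spectra = [[[float(b)] * 3 for _ in range(2)] for b in range(4)]

middle = stack_adjacent(spectra, beam=2)
edge = stack_adjacent(spectra, beam=0)
print([channel[0][0] for channel in middle])  # [1.0, 2.0, 3.0]
print([channel[0][0] for channel in edge])    # [0.0, 0.0, 1.0]
```

Zero-filling rather than repeating the edge beam avoids making a single-beam signal look as if it were present in a neighbouring beam.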

The final precision of the pipeline was 99.99%, with a recall of 100% for candidates with a Signal to Noise Ratio (SNR) greater than 15, 97% for candidates with SNR between 10 and 15, and 80% for candidates with SNR between 8 and 10.
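For reference, precision and recall are computed from confusion-matrix counts as sketched below, treating "FRB" as the positive class. The counts in the example are made up purely to show the arithmetic; they are not the evaluation data behind the figures above.

```python
def precision(true_pos, false_pos):
    """Fraction of candidates labelled FRB that really are FRBs."""
    total = true_pos + false_pos
    return true_pos / total if total else 0.0


def recall(true_pos, false_neg):
    """Fraction of real FRBs that the classifier labelled as FRB."""
    total = true_pos + false_neg
    return true_pos / total if total else 0.0


# Hypothetical counts, chosen only to illustrate the formulas.
example_precision = precision(true_pos=9999, false_pos=1)
example_recall = recall(true_pos=97, false_neg=3)

print(f"{example_precision:.2%}")  # 99.99%
print(f"{example_recall:.0%}")     # 97%
```

High precision means few RFI candidates leak through as FRBs, while the falling recall at low SNR means some faint genuine bursts are rejected.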